The Rationale behind dVRK-XR

21 Jun 2019

The da Vinci Research Kit (dVRK) has created a successful open source community for surgical robotics. To date, dVRK has been installed in more than 35 research institutes around the world.

Motivation

With promising mixed reality hardware and software entering the market, researchers in this community have started to explore combining mixed reality with robotic interventions. dVRK-XR is an open source extension of dVRK that aims to broaden the research spectrum of the dVRK community. XR stands for Extended Reality. dVRK-XR facilitates the integration of the da Vinci robot with various mixed reality platforms.

The source code of dVRK-XR is hosted on GitHub: https://github.com/jhu-dvrk/dvrk-xr. A paper about the package was published at the 2019 Hamlyn Symposium on Medical Robotics. In this post, I would like to discuss the design rationale behind dVRK-XR in more detail.

Sample Videos

To show that dVRK-XR is indeed cross-platform, we tested it on:

- Unity editor on Mac (YouTube)

- Microsoft HoloLens (YouTube)

- Samsung Odyssey (YouTube)

- Android Phone (YouTube)

- Android ARCore (YouTube 1, YouTube 2)

- Google Cardboard (YouTube)

Key Considerations

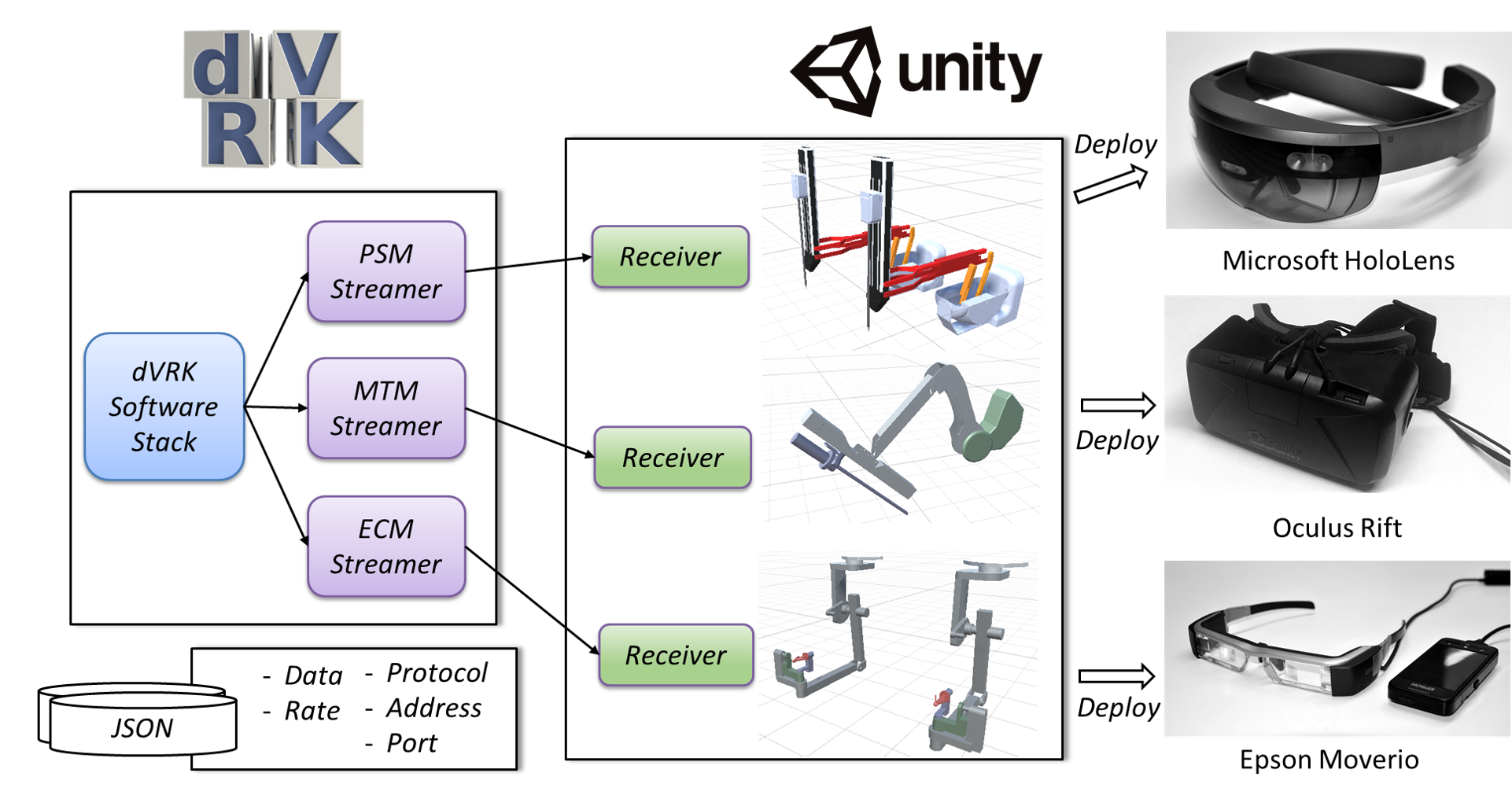

The system architecture of dVRK-XR is shown below:

I would like to share some of my thoughts while designing and implementing dVRK-XR.

Two JSON Configuration Files

Running dVRK-XR requires two configuration files: one that is already used by dVRK, and another that configures sawSocketStreamer.

We use sawSocketStreamer as the UDP server for the mixed reality client. As the name indicates, it is a standard component of the JHU CISST/SAW framework. When the dVRK or da Vinci program starts, sawSocketStreamer can be linked with the main program as a component, via the dVRK configuration file. Here is an example.

The other configuration file controls the behavior of sawSocketStreamer, for example the destination and the data to stream. Here is an example.

With the two configuration files, the streaming behavior is set each time before the program starts, so compilation is needed only once.
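As a rough illustration of why a run-time configuration file avoids recompilation, the sketch below parses and validates a streamer configuration before anything starts. It is written in Python for brevity, and the key names (`ip`, `port`, `data`) and the topic strings are hypothetical placeholders, not the actual sawSocketStreamer schema; refer to the example files in the repository for the real format.

```python
import json

# Hypothetical streamer configuration. The key names and values are
# illustrative only and are NOT the actual sawSocketStreamer schema.
EXAMPLE_CONFIG = """
{
    "ip": "127.0.0.1",
    "port": 48051,
    "data": ["PSM1/joint_state", "PSM2/joint_state"]
}
"""


def load_streamer_config(text):
    """Parse and validate a (hypothetical) streamer configuration."""
    config = json.loads(text)
    for key in ("ip", "port", "data"):
        if key not in config:
            raise ValueError(f"missing required key: {key}")
    port = int(config["port"])
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    config["port"] = port
    return config


# Changing the destination or the streamed data only requires editing the
# JSON text; the program itself stays untouched.
config = load_streamer_config(EXAMPLE_CONFIG)
print(config["ip"], config["port"], config["data"])
```

Validating the file up front means a typo in the destination is reported at startup instead of surfacing later as a silent lack of data on the headset.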

Why sawSocketStreamer?

Why not a ROS node? The sawSocketStreamer is essentially a dynamically linked library that enables streaming for dVRK or da Vinci. When the mixed reality application must run in real time, this design minimizes the overhead. For example, the real-time joint states can be pulled from the robot and streamed out directly with sawSocketStreamer. With a ROS node implementation, the joint states would first have to be converted to ROS messages and buffered.

However, ROS is indeed very easy to use. We plan to implement a ROS bridge to relay joint messages. Another advantage of a ROS bridge is that rosbag will be supported, which will help users record and replay robot trajectories.
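To make the "streamed out directly" idea concrete, here is a minimal Python sketch that serializes one joint-state sample and sends it as a single UDP datagram, with no intermediate message layer or queue. The JSON layout (`name`, `position`) and the joint values are assumptions made for this illustration; they are not taken from sawSocketStreamer itself.

```python
import json
import socket


def encode_joint_state(name, positions):
    """Serialize one sample as a UTF-8 JSON datagram (assumed layout)."""
    return json.dumps({"name": name, "position": list(positions)}).encode("utf-8")


def send_joint_state(sock, address, name, positions):
    """Send a single sample; UDP needs no connection setup or send queue."""
    sock.sendto(encode_joint_state(name, positions), address)


# Loopback demonstration: the receiver binds to an ephemeral local port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(1.0)

sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
send_joint_state(sender, receiver.getsockname(), "PSM1", [0.1, -0.2, 0.05])

payload, _ = receiver.recvfrom(4096)
message = json.loads(payload.decode("utf-8"))
print(message)

sender.close()
receiver.close()
```

Each datagram is self-contained, so a lost packet costs one sample and the next one simply supersedes it, which suits visualization better than a reliable but potentially delayed stream.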

Never-Blocking

For AR/VR applications, the most important aspect in my opinion is the refresh rate. A laggy AR app bores people immediately. A laggy VR app makes people physically uncomfortable. dVRK-XR optimizes the refresh rate of the AR/VR applications by:

- reducing the meshes of the robot

- running the UDP receiver on a separate thread (not the Unity rendering thread)

- streaming at a rate higher than the rendering frame rate, to reduce latency

The sawSocketStreamer is also non-blocking and runs in a thread separate from the dVRK or da Vinci program, owing to the nature of the CISST/SAW framework. It adds minimal overhead to the normal operation of the robot.

dVRK-XR is minimally invasive to both the robot and the users.
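The receiver-side pattern described above can be sketched as follows: a background thread drains the UDP socket and keeps only the newest sample, so the rendering loop never waits on the network. This is a Python illustration of the general technique, not the Unity C# code in dVRK-XR; the class name `LatestValueReceiver` and the `seq` field are made up for the example.

```python
import json
import socket
import threading
import time


class LatestValueReceiver:
    """Receive UDP datagrams on a background thread and keep only the newest."""

    def __init__(self, host="127.0.0.1", port=0):
        self._sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self._sock.bind((host, port))
        self._sock.settimeout(0.1)  # lets the loop notice the stop flag
        self.address = self._sock.getsockname()
        self._lock = threading.Lock()
        self._latest = None
        self._running = True
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while self._running:
            try:
                payload, _ = self._sock.recvfrom(4096)
            except socket.timeout:
                continue
            except OSError:
                break
            try:
                sample = json.loads(payload.decode("utf-8"))
            except ValueError:
                continue  # ignore malformed datagrams
            with self._lock:
                self._latest = sample  # overwrite: older samples are dropped

    def latest(self):
        """Return the newest sample immediately, or None if nothing arrived."""
        with self._lock:
            return self._latest

    def close(self):
        self._running = False
        self._thread.join()
        self._sock.close()


# Demonstration: send faster than we "render" and read only the newest value.
receiver = LatestValueReceiver()
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
for seq in range(5):
    sender.sendto(json.dumps({"seq": seq}).encode("utf-8"), receiver.address)

deadline = time.monotonic() + 2.0
while time.monotonic() < deadline:
    sample = receiver.latest()
    if sample is not None and sample["seq"] == 4:
        break
    time.sleep(0.01)

newest = receiver.latest()
sender.close()
receiver.close()
```

Because `latest()` only takes a short lock and never touches the socket, the caller's frame time is independent of network jitter; streaming faster than the render rate simply means the value it reads is fresher.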

Unity

Unity is a great platform for AR/VR development. It wraps the device-specific APIs, and most major AR/VR hardware providers collaborate with Unity to enable seamless deployment to their devices. We certainly take advantage of that.

However, certain UWP APIs are not wrapped yet, for example the UWP socket and threading APIs. We take care of those in dVRK-XR.

Assembly of Robots in Unity

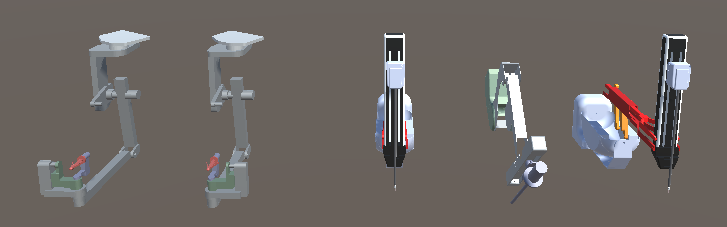

The dVRK and da Vinci systems include two Patient-Side Manipulators (PSM), two Master Tool Manipulators (MTM), and one Endoscopic Camera Manipulator (ECM). In dVRK-XR, all of the manipulators have virtual counterparts modeled as Unity Prefabs.

Each manipulator has one C# script that is derived from URDFRobot.cs. Each joint, whether active or passive, is controlled by a URDFJoint.cs script. The joints and meshes are assembled in a tree-like structure in the Unity scene. These configurations are adapted from the URDF configurations available in dvrk-ros.

URDFJoint.cs can model continuous, revolute, prismatic and passive joints. Each passive joint needs a reference to its associated active joint. Again, these conventions are adapted from ROS.

URDFRobot.cs controls the behavior of the whole arm, including setting the values for all joints when a new message comes in.
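The relationship between the robot script, the joint tree, and passive joints can be sketched in a few lines. The Python below is only an illustration of the structure described above, not a port of URDFRobot.cs or URDFJoint.cs; the class names, the `multiplier` field, and the joint names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Joint:
    name: str
    kind: str  # "revolute", "continuous", "prismatic", or "passive"
    value: float = 0.0
    source: Optional["Joint"] = None  # active joint that a passive joint follows
    multiplier: float = 1.0           # hypothetical scale applied to the source
    children: List["Joint"] = field(default_factory=list)

    def set_value(self, value):
        if self.kind == "passive":
            raise ValueError(f"{self.name} is passive and cannot be set directly")
        self.value = value

    def update_passive(self):
        if self.kind == "passive" and self.source is not None:
            self.value = self.multiplier * self.source.value


class Robot:
    """Owns the joint tree and applies incoming joint messages to it."""

    def __init__(self, root):
        self.joints = {}
        self._collect(root)
        # Active joints in depth-first order; messages are assumed to match it.
        self.active = [j for j in self.joints.values() if j.kind != "passive"]

    def _collect(self, joint):
        self.joints[joint.name] = joint
        for child in joint.children:
            self._collect(child)

    def apply_message(self, positions):
        if len(positions) != len(self.active):
            raise ValueError("message length does not match active joint count")
        for joint, value in zip(self.active, positions):
            joint.set_value(value)
        for joint in self.joints.values():
            joint.update_passive()


# A tiny made-up arm: yaw -> pitch -> (passive follower) -> insertion.
insertion = Joint("insertion", "prismatic")
pitch = Joint("pitch", "revolute")
follower = Joint("pitch_follower", "passive", source=pitch, multiplier=-1.0,
                 children=[insertion])
pitch.children.append(follower)
yaw = Joint("yaw", "revolute", children=[pitch])

robot = Robot(yaw)
robot.apply_message([0.3, 0.5, 0.12])
print({name: joint.value for name, joint in robot.joints.items()})
```

Updating passive joints in a second pass, after all active values are set, keeps the result independent of the order in which joints appear in the tree.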

Latency

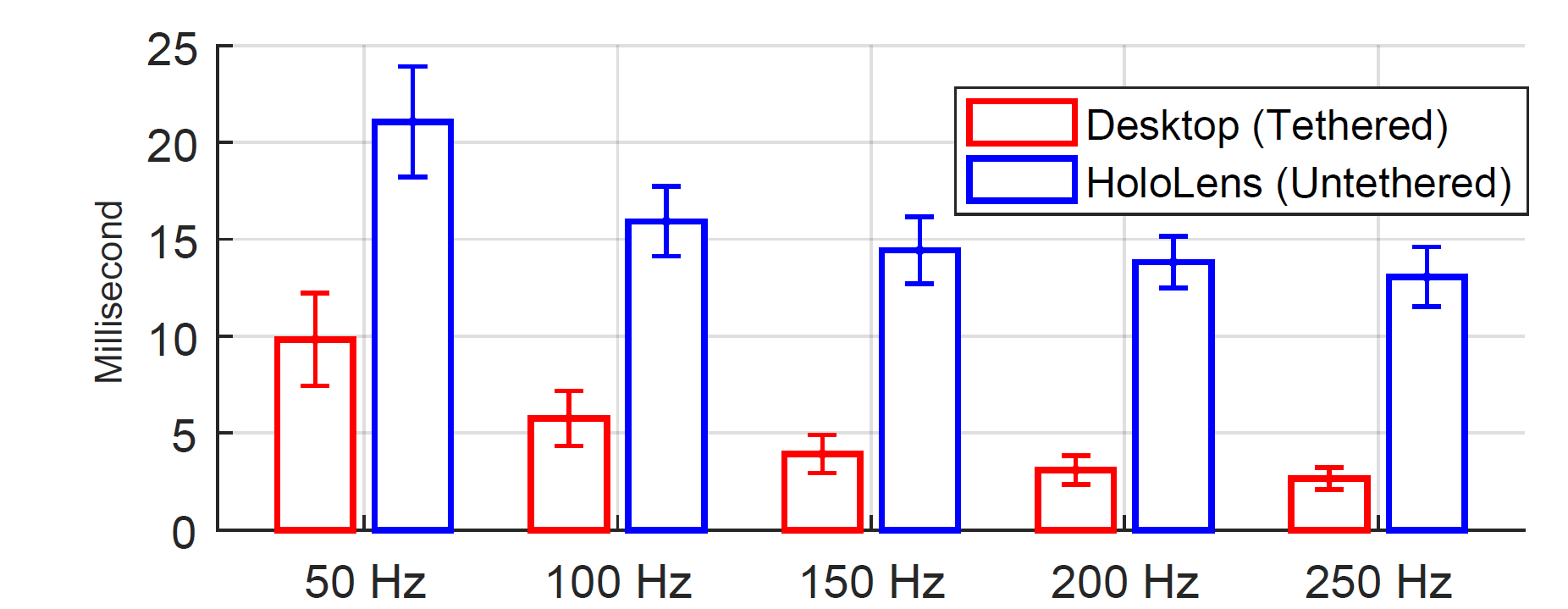

We carefully measured the latency between the robot and the mixed reality platforms (tethered, and non-tethered: HoloLens). When the streaming rate of sawSocketStreamer is configured to be 100 Hz, the latency for the tethered system is 5.76 milliseconds. For a HoloLens on the same wireless network, the latency is 15.93 milliseconds. Both numbers are shorter than a single rendered frame (about 16.7 milliseconds at 60 Hz).

We can therefore say that the mixed reality visualization with dVRK-XR is real-time.
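The comparison against one frame is simple arithmetic, shown here as a short Python check. The measured latencies and the 100 Hz streaming rate come from the text above; the 60 Hz display rate is an assumption made for this example.

```python
def period_ms(rate_hz):
    """Duration of one cycle, in milliseconds, for a given rate in Hz."""
    return 1000.0 / rate_hz


# Measured values quoted in this post.
MEASURED_LATENCY_MS = {"tethered": 5.76, "HoloLens (wireless)": 15.93}
STREAMING_RATE_HZ = 100.0

# Assumption for this example: the display refreshes at 60 Hz.
ASSUMED_DISPLAY_RATE_HZ = 60.0

frame_ms = period_ms(ASSUMED_DISPLAY_RATE_HZ)
stream_ms = period_ms(STREAMING_RATE_HZ)

within_one_frame = {
    name: latency < frame_ms for name, latency in MEASURED_LATENCY_MS.items()
}

print(f"frame period: {frame_ms:.2f} ms, streaming period: {stream_ms:.2f} ms")
for name, ok in within_one_frame.items():
    print(f"{name}: {MEASURED_LATENCY_MS[name]} ms, within one frame: {ok}")
```

The streaming period is shorter than the assumed frame period, so a fresh sample is normally available for every rendered frame.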

da Vinci Robot

dVRK-XR supports the da Vinci Si in addition to the da Vinci Research Kit. However, permission is required to enable the research API on the da Vinci robot (to access the kinematic data). Several institutes in the dVRK community have been approved, with a signed NDA with Intuitive Surgical Inc.

The setup with the da Vinci Si is straightforward: link sawSocketStreamer with sawIntuitiveDaVinci. Done.

Potential Research

The major objective of dVRK-XR is to facilitate the integration of AR/VR with medical robots.

Real-time mixed reality can benefit:

- Bedside assistance (we have previously introduced ARssist)

- Surgery staff communication in the OR

- Remote surgery or supervision

- Collaborative surgery

Record and replay can benefit:

- Medical education and training

- Patient understanding

- Post-surgery visualization and analysis

There are probably many more applications that I have not thought about.

Reference to Publication

For more details, please refer to our paper:

@inproceedings{qian2019dvrkxr,
  title={dVRK-XR: Mixed Reality Extension for da Vinci Research Kit},
  author={Qian, Long and Deguet, Anton and Kazanzides, Peter},
  booktitle={Hamlyn Symposium on Medical Robotics},
  year={2019},
  pages={93--94}
}

It was our great pleasure to receive the Best Paper Award (2nd place) this year at the Hamlyn Symposium.

Thank you for reading!