Teleporting the Expert Surgeon into Your OR

29 Aug 2019

The Medical Augmented Reality Summer School (MARSS) 2019 was successfully held at Balgrist Hospital, Zurich, on Aug 5-16.

It was a two-week event. In the second week, participants formed teams and developed medical augmented reality projects. Our team worked on the project Teleporting the Expert Surgeon into Your OR. It was a successful hackathon project with many exciting memories, and we were lucky enough to win the Audience Award of the summer school.

Above is our project demo video. In this blog post, I would like to share my experience of this project and the summer school.

The Idea

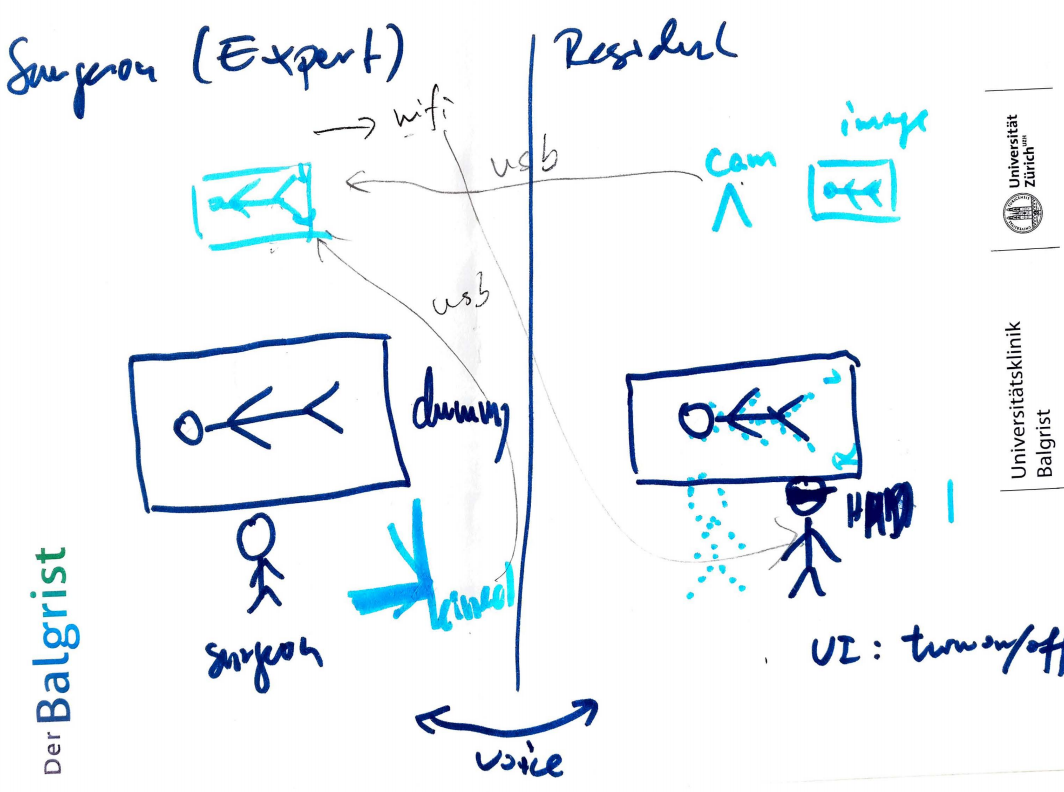

Telepresence is not a new idea in the mixed reality community. Imagine a “3D Skype” app, where you can see your friend walking around in your own 3D space. In the medical domain, such a fun idea can actually be very useful. We propose to teleport an expert surgeon into the operating room of a less experienced young surgeon. Via realistic 3D telepresence, the expert surgeon can explain the procedure to the young surgeon and provide hand-over-hand guidance.

Here is our project illustration from the brainstorming session.

New Toys

Everyone takes away their own excitement from the summer school. For me, the most exciting part was getting hands-on experience with new or even unreleased devices: Azure Kinect and HoloLens 2. Thanks to Nassir, Microsoft was convinced to lend us a few HoloLens 2 devices for development.

The pipeline of our system is fairly straightforward:

- Capture real-time RGB and depth images of the surgeon with Azure Kinect.

- Subtract the background from the depth image.

- Convert the RGB and depth images into a colored point cloud, using the proper calibration parameters.

- Stream the point cloud to the HoloLens.

- Visualize the point cloud on the HoloLens.
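To make the background subtraction and point cloud conversion steps more concrete, here is a minimal NumPy sketch. It is not our actual hackathon code. It assumes that background subtraction can be approximated by a simple depth range threshold, that the RGB image is already registered to the depth image, and that the camera follows a pinhole model. The function names, the threshold values, and the intrinsics `fx`, `fy`, `cx`, `cy` are all hypothetical; in a real system the intrinsics would come from the camera calibration.

```python
import numpy as np


def subtract_background(depth_mm, near_mm=300, far_mm=1500):
    """Zero out depth pixels outside [near_mm, far_mm].

    Assumption: the surgeon stands within a known depth range, so anything
    closer or farther is treated as background. The thresholds are made up.
    """
    depth = depth_mm.copy()
    depth[(depth < near_mm) | (depth > far_mm)] = 0
    return depth


def depth_to_colored_points(depth_mm, rgb, fx, fy, cx, cy):
    """Back-project valid depth pixels into a colored point cloud.

    depth_mm: (H, W) depth image in millimetres, 0 means "no data".
    rgb:      (H, W, 3) uint8 color image aligned with the depth image.
    Returns (N, 3) float32 points in metres and (N, 3) uint8 colors.
    """
    v, u = np.nonzero(depth_mm)                      # rows and columns of valid pixels
    z = depth_mm[v, u].astype(np.float32) / 1000.0   # millimetres to metres
    x = (u - cx) * z / fx                            # pinhole back-projection
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=1).astype(np.float32)
    colors = rgb[v, u]
    return points, colors
```

Calling `subtract_background` first and then `depth_to_colored_points` on its result yields only the foreground points, which also reduces how many points have to be streamed and rendered.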

The GPU of HoloLens 2 is much improved over the first generation, so it can render more points. While I was developing ARAMIS (to appear in MICCAI 2019), in which a point cloud is streamed to HoloLens 1, the major performance bottleneck I identified was the rendering of the points rather than the streaming bandwidth. HoloLens 2 solves this by offering a better GPU.

The field of view of HoloLens 2 is great. This became even more obvious when I came back to the lab and put on HoloLens 1. The interaction paradigm of HoloLens 2 is completely different. It is so natural that you can interact with holograms intuitively. The system understands your intention very well by tracking your hands.

The Iterations

We went through three development phases within the Hackathon week.

In the first phase, we developed a fake PC-based server that generates arbitrary point clouds, and a point cloud client in the Unity Editor. This phase set a starting point from which everyone could work on their own parts separately.
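As an illustration of what such a fake server has to do, here is a small Python sketch that generates an arbitrary colored point cloud and packs it into a binary message that a client could decode. It is only a sketch under assumptions: the wire format (a 4-byte point count followed by 15 bytes per point) and the function names are hypothetical and are not the format we actually used, and the network transport itself is left out.

```python
import random
import struct

# Hypothetical wire format, little-endian, no padding:
#   header: uint32 point count
#   point:  x, y, z as float32 (metres), then r, g, b as uint8
HEADER = struct.Struct("<I")
POINT = struct.Struct("<fffBBB")  # 15 bytes per point


def make_fake_cloud(n_points, seed=0):
    """Generate n_points random colored points inside a 1 m cube."""
    rng = random.Random(seed)
    return [
        (
            rng.uniform(-0.5, 0.5),
            rng.uniform(-0.5, 0.5),
            rng.uniform(-0.5, 0.5),
            rng.randrange(256),
            rng.randrange(256),
            rng.randrange(256),
        )
        for _ in range(n_points)
    ]


def encode_cloud(cloud):
    """Serialize a list of (x, y, z, r, g, b) tuples into one message."""
    return HEADER.pack(len(cloud)) + b"".join(POINT.pack(*p) for p in cloud)


def decode_cloud(payload):
    """Inverse of encode_cloud; coordinates come back at float32 precision."""
    (n,) = HEADER.unpack_from(payload, 0)
    return [
        POINT.unpack_from(payload, HEADER.size + i * POINT.size)
        for i in range(n)
    ]
```

With a stand-in like this, the client side can be developed and tested against `encode_cloud(make_fake_cloud(...))` before any real sensor data is available.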

In the second phase, Federica worked on the PC server, including point cloud generation from Azure Kinect, background subtraction, and interactions to tune the behavior of the server. I worked on the HoloLens 2 program that receives and visualizes the point cloud. Arnaud worked on the user interaction on HoloLens 2 using MRTK. Christian worked on the clinical part and designed the demo.

In the third phase, everything was combined and optimized, and we made the demo video (overnight, of course).

The Demo

The last day of the summer school was dedicated to demos. We saw the excitement on the faces of our audience.

Dr. Farshad acting as the expert surgeon (which he is) for his fellow:

A virtual me shaking hands with participants:

A virtual me high-fiving participants:

Our Team

I feel very lucky to have worked with these enthusiastic people during the summer school.

We are group 14.

- Team mentor: Prof. Ulrich Eck.

- Clinical partner: Dr. Christian Hofsepian

- Industrial partner: Dr. Federica Bogo

- Engineering students: Arnaud Allemang-Trivalle and me.

Thank you for reading!